Temporal Event Set Modeling

Paper in the proceedings of ACML 2023 [PDF link] [Presentation] [GitHub]

Abstract

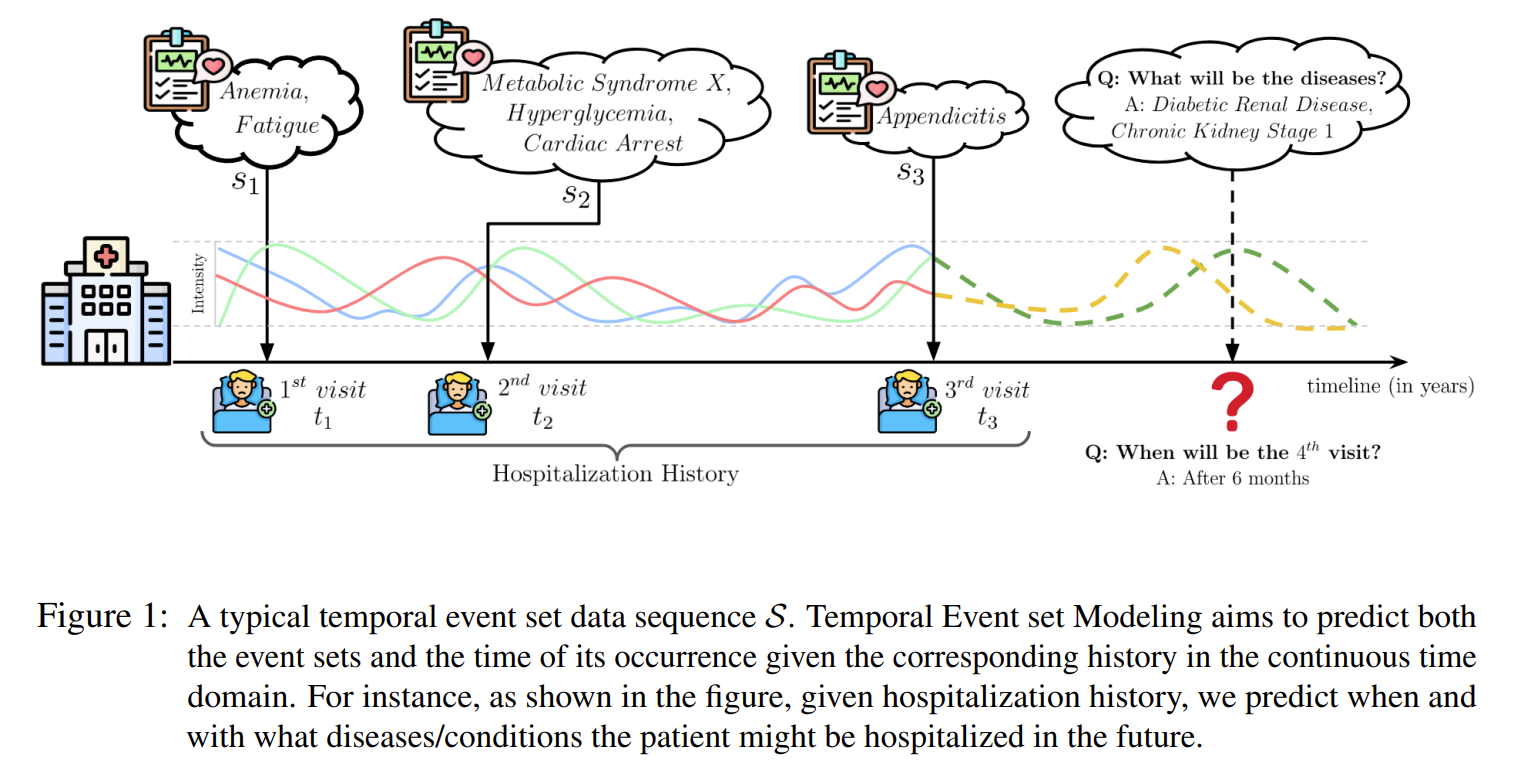

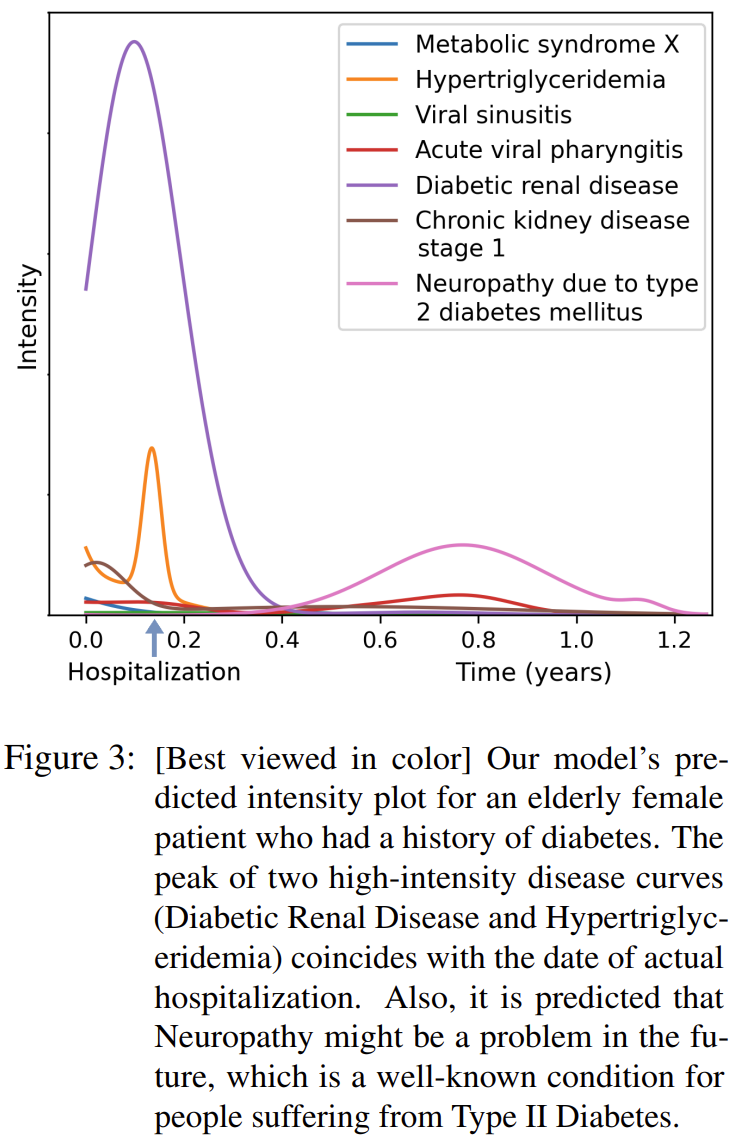

Temporal Point Processes (TPPs) play an important role in predicting and forecasting events. Although these problems have been studied extensively, predicting multiple simultaneously occurring events remains challenging. For instance, a patient is often admitted to a hospital with multiple conditions at once. Similarly, people buy more than one stock at a time, and multiple news stories break at the same time. Moreover, these events do not occur at discrete time intervals, and forecasting event sets in the continuous time domain remains an open problem. Naïve extensions of existing TPP models to this problem either have to deal with an exponentially large number of possible event sets or ignore the dependencies among events within a set. In this work, we propose a scalable and efficient TPP-based approach to this problem. Our approach incorporates contextual event embeddings, temporal information, and domain features to model temporal event sets. We demonstrate its effectiveness through extensive experiments on multiple datasets, showing that our model outperforms existing methods in terms of both prediction metrics and computational efficiency. To the best of our knowledge, this is the first work that uses TPPs to predict event set intensities in the continuous time domain.

Introduction

Temporal Point Processes (TPPs) are probabilistic generative models for continuous-time event sequences. They have been widely used in modeling real-world event sequences, such as neural spike trains, disease outbreaks, and social media activities.
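A TPP is usually specified through its conditional intensity λ(t), the instantaneous event rate given the history of past events. As a minimal illustration, assuming a classical univariate Hawkes process [3] with an exponential kernel (not the TESET model itself), the intensity is λ(t) = μ + Σ_{t_i < t} α·exp(-β(t - t_i)). The function name and parameter values below are illustrative only.

```python
import math

def hawkes_intensity(t, history, mu=0.2, alpha=0.8, beta=1.0):
    """Conditional intensity of a univariate Hawkes process with an
    exponential kernel: a baseline rate mu plus a decaying excitation
    alpha * exp(-beta * (t - t_i)) from every past event t_i < t.
    Parameter values are illustrative, not taken from the paper."""
    return mu + sum(alpha * math.exp(-beta * (t - ti)) for ti in history if ti < t)

events = [1.0, 1.5, 4.0]
for t in [0.5, 1.6, 5.0]:
    print(t, round(hawkes_intensity(t, events), 4))
```

Each past event temporarily raises the rate of future events, which is why the intensity just after the events at 1.0 and 1.5 is well above the baseline μ.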

TPPs can be learned from data using traditional methods [1, 2, 3] or deep learning [4, 5, 6]. Example use cases include modeling and predicting hospital visits, stock portfolio selection, and shopping basket checkouts.

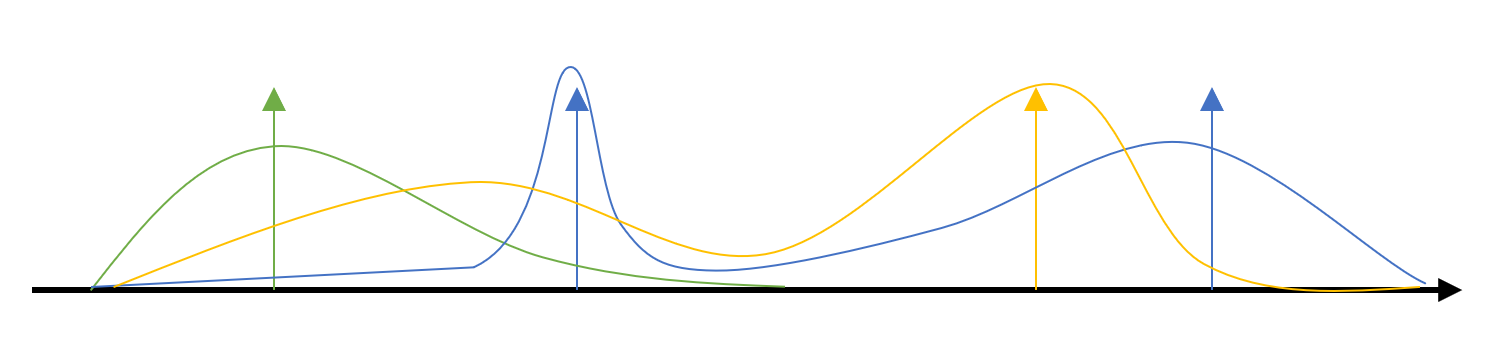

The paper extends the notion of a sequence of events in TPPs to a sequence of sets of events, and proposes a novel model for predicting the intensity of a set of events in the continuous time domain. The proposed modeling approach is named Temporal Event Set (TESET) Modeling.
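To make the set-valued setting concrete, here is a minimal Python sketch, assuming each element of the sequence is a continuous timestamp paired with an unordered set of event types. `EventSet`, `multi_hot`, and `naive_mark_count` are hypothetical names and the hospital data is made up. The last helper illustrates the blow-up mentioned in the abstract: treating every possible non-empty set as its own mark of a standard TPP yields 2^K - 1 marks for K event types.

```python
from dataclasses import dataclass
from typing import FrozenSet, List

@dataclass(frozen=True)
class EventSet:
    time: float              # continuous timestamp of the set
    items: FrozenSet[str]    # event types that occur together at `time`

def multi_hot(event_set, vocab):
    """Encode a set as a 0/1 vector over the vocabulary of event types."""
    return [1 if v in event_set.items else 0 for v in vocab]

def naive_mark_count(num_types):
    """Number of distinct non-empty sets if each set were treated as one mark."""
    return 2 ** num_types - 1

# Hypothetical hospital-visit sequence (illustrative data only).
sequence: List[EventSet] = [
    EventSet(0.0, frozenset({"diabetes", "hypertension"})),
    EventSet(3.5, frozenset({"hypertension"})),
    EventSet(7.2, frozenset({"asthma", "diabetes", "hypertension"})),
]
vocab = sorted({item for s in sequence for item in s.items})
print(vocab)
print([multi_hot(s, vocab) for s in sequence])
print(naive_mark_count(len(vocab)), naive_mark_count(100))
```

With only 100 event types, the naive mark space already has 2^100 - 1 ≈ 1.27 × 10^30 entries, whereas a multi-hot encoding needs just 100 dimensions per set.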

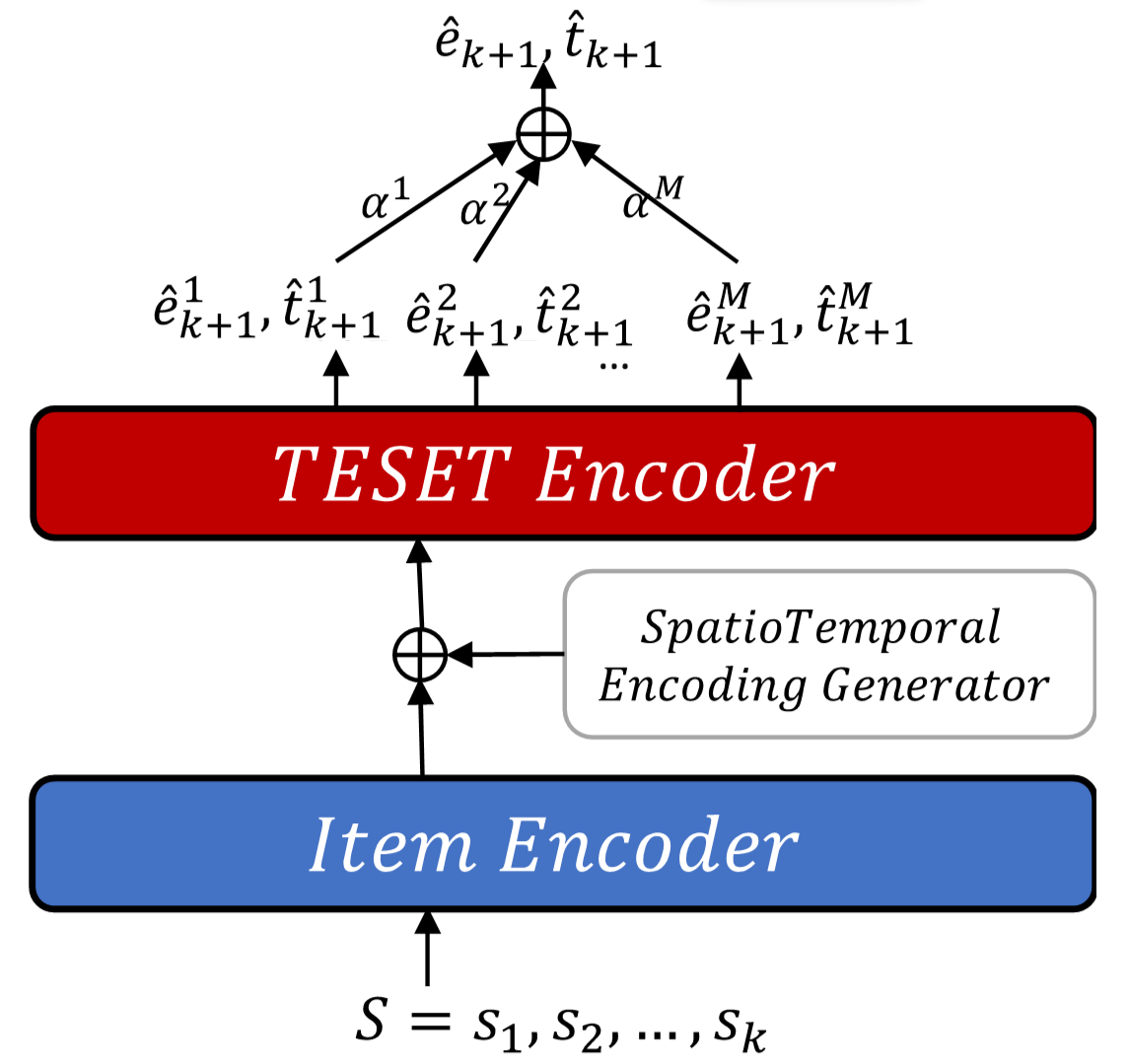

TESET Modeling methodology

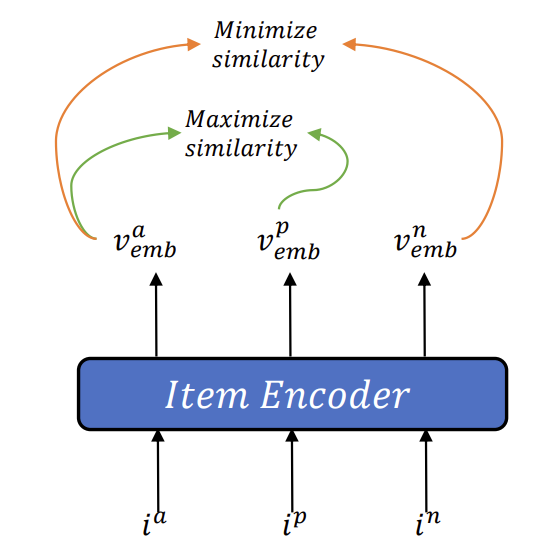

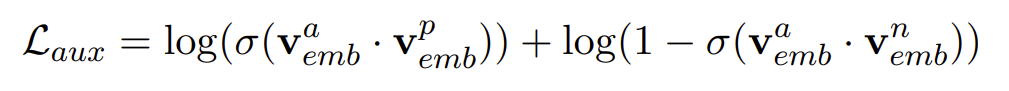

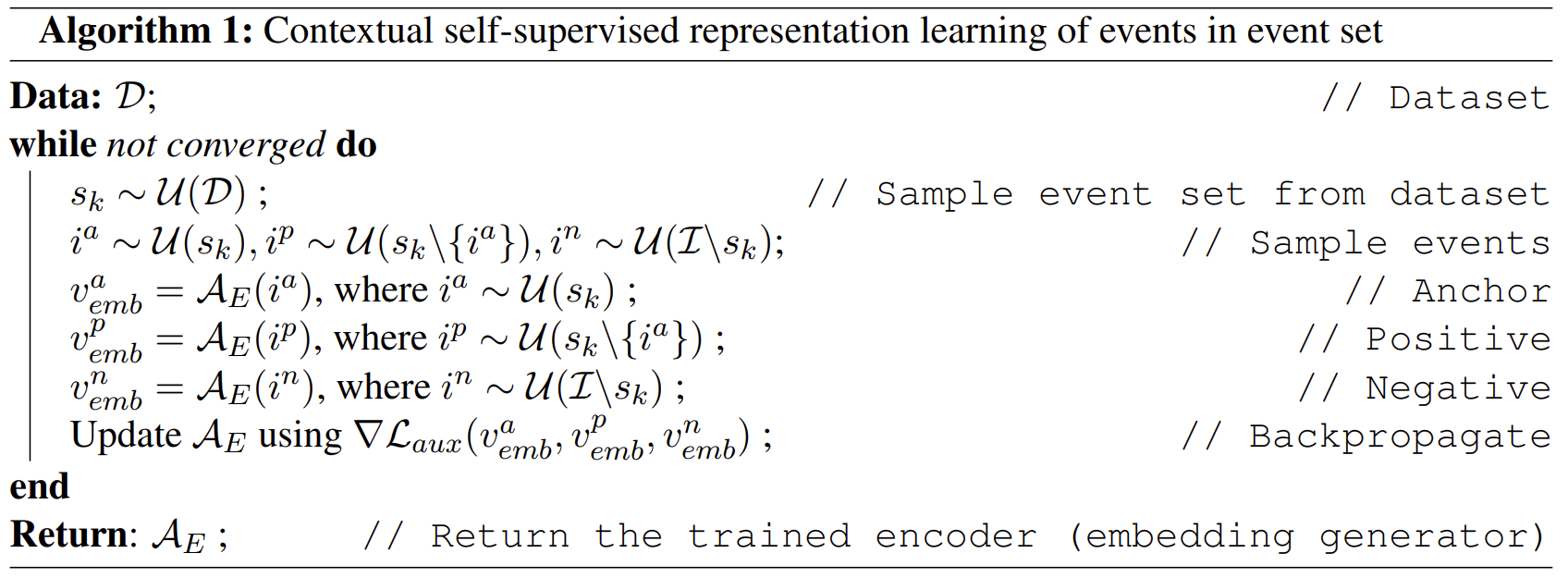

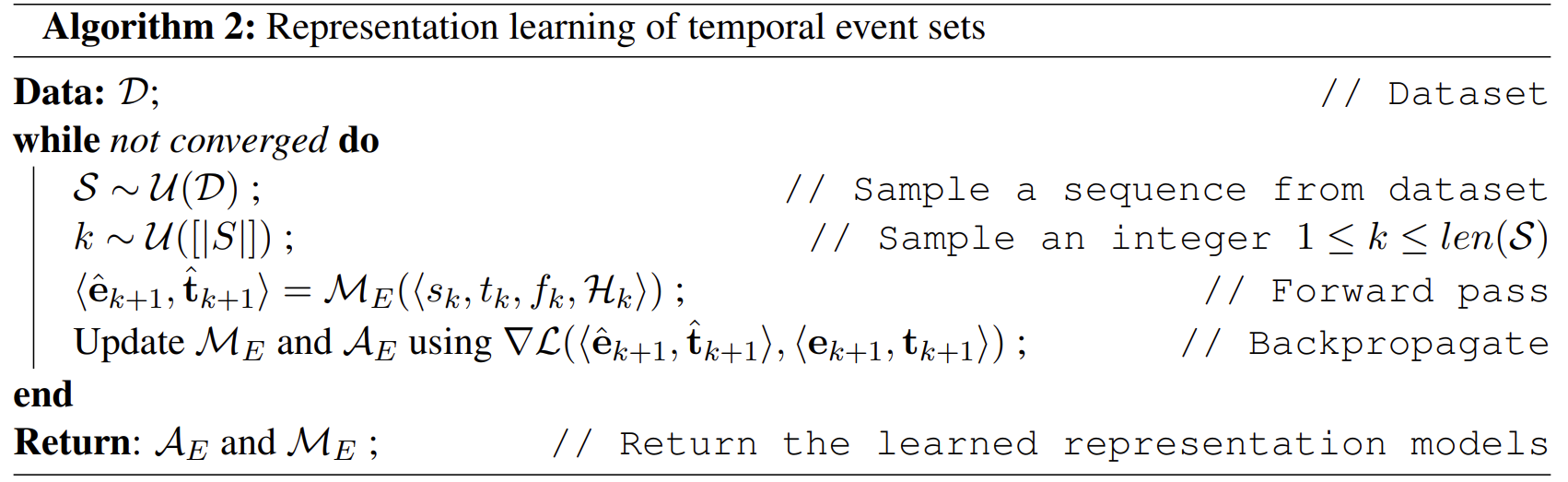

This is a two-step process:

- Learning the embeddings of the items in the event sets

- Modeling the temporal dependencies between the event sets

Step 1: Learning item representations

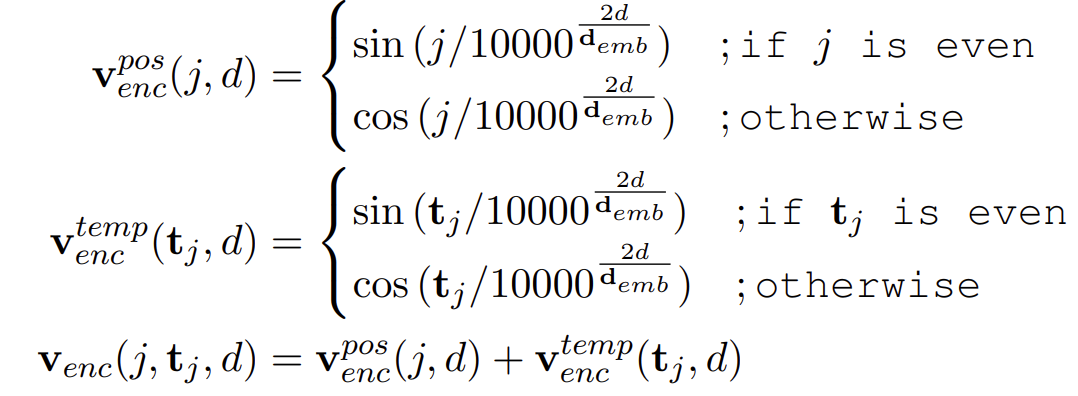
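The text does not spell out the Step 1 objective (it is given in the figure above). Purely as an assumption for illustration, one common way to learn item embeddings from unordered sets is a word2vec-style skip-gram objective in which every other item of the same set acts as context. The sketch below only builds those (item, context) training pairs; `set_context_pairs` is a hypothetical helper, and TESET's actual representation learner may differ.

```python
from collections import Counter
from itertools import permutations

def set_context_pairs(event_sets):
    """Yield (item, context_item) for every ordered pair of distinct items
    that co-occur in the same set. Sets have no internal order, so the whole
    set serves as the context window (an assumption, not the paper's method)."""
    for s in event_sets:
        for item, ctx in permutations(sorted(s), 2):
            yield item, ctx

sets = [{"a", "b", "c"}, {"a", "b"}, {"c"}]
pairs = list(set_context_pairs(sets))
print(len(pairs))                   # 6 pairs from the first set + 2 from the second
print(Counter(pairs)[("a", "b")])   # how often "b" appears as context of "a"
```

Under this assumption, the pairs could be fed to any skip-gram trainer with negative sampling to obtain one vector per item; singleton sets such as `{"c"}` contribute no pairs.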

Step 2: Modeling temporal dependencies among Event sets

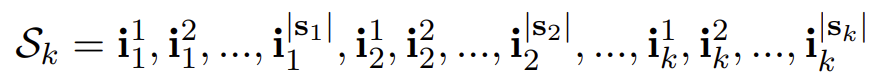

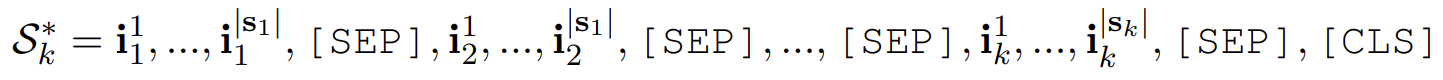

We assume that we are given a temporal event set sequence as follows:

[CLS] and [SEP] are special tokens borrowed from the transformer literature (for example, BERT). Each [SEP] token captures the information of one event set, while [CLS] captures global information about the entire sequence.


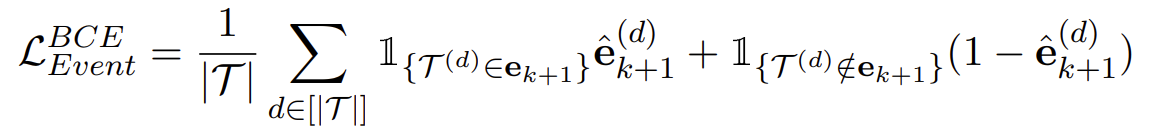

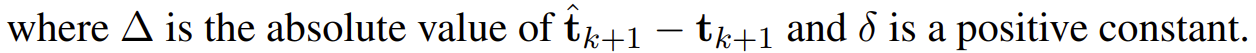

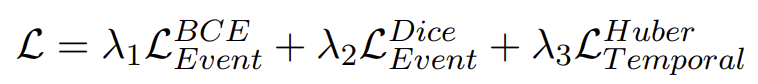
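A minimal sketch of how a temporal event set sequence might be flattened into one token stream for a transformer, assuming a single [CLS] at the start and a [SEP] closing each set, as in BERT-style inputs. The exact layout used by TESET is the one shown in the figures above; `flatten_with_special_tokens` is a hypothetical helper, items within a set are sorted only to make the output deterministic, and giving [CLS] timestamp 0.0 is an arbitrary choice.

```python
CLS, SEP = "[CLS]", "[SEP]"

def flatten_with_special_tokens(sequence):
    """sequence: list of (time, set_of_items) pairs ordered by time.
    Returns parallel lists of tokens, timestamps, and set indices so that
    temporal information can be added to each token's embedding."""
    tokens, times, set_ids = [CLS], [0.0], [-1]   # [CLS] belongs to no single set
    for idx, (t, items) in enumerate(sequence):
        for item in sorted(items):
            tokens.append(item)
            times.append(t)
            set_ids.append(idx)
        tokens.append(SEP)                         # summary slot for set `idx`
        times.append(t)
        set_ids.append(idx)
    return tokens, times, set_ids

seq = [(0.0, {"b", "a"}), (2.5, {"c"})]
tokens, times, set_ids = flatten_with_special_tokens(seq)
print(tokens)
print(times)
print(set_ids)
sep_positions = [i for i, tok in enumerate(tokens) if tok == SEP]
print(sep_positions)
```

The encoder's hidden states at `sep_positions` would then give one vector per event set, and the state at position 0 a vector for the whole sequence.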

Binary cross-entropy loss for predicting the next event set:


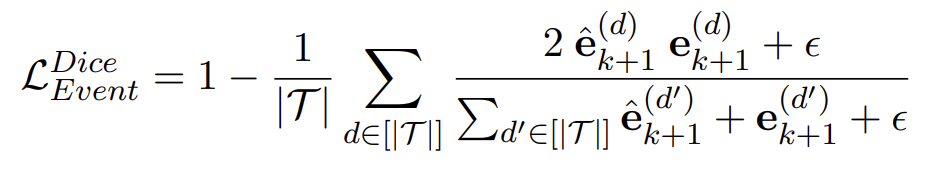
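Because the next event set can contain several event types, a natural reading of this loss is a multi-label binary cross-entropy over a multi-hot target, with one independent Bernoulli term per event type. The sketch below assumes that form and averages over types; `multilabel_bce` is a hypothetical name, and the paper's exact normalization and weighting are in the equation above and may differ.

```python
import math

def multilabel_bce(probs, targets, eps=1e-7):
    """Mean binary cross-entropy over K event types.
    probs: predicted probability that each type appears in the next set.
    targets: multi-hot vector (0/1) of the true next set."""
    total = 0.0
    for p, y in zip(probs, targets):
        p = min(max(p, eps), 1 - eps)   # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(targets)

print(multilabel_bce([0.9, 0.2, 0.7], [1, 0, 1]))
```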

Dice loss for handling class imbalance:


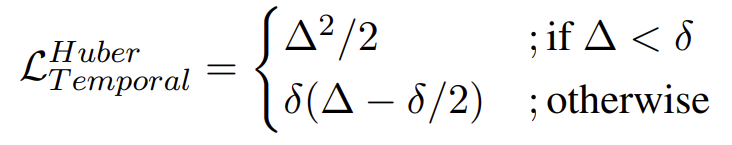
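A minimal sketch of a soft Dice loss over the predicted next-set probabilities, assuming the common form 1 - (2·Σ p·y + s) / (Σ p + Σ y + s). The smoothing constant `s` and the name `soft_dice_loss` are assumptions here; the paper's exact definition is the equation above. Because the overlap term ignores true negatives, the many event types that are absent from a set cannot dominate the loss.

```python
def soft_dice_loss(probs, targets, smooth=1.0):
    """Soft Dice loss: 1 - (2 * sum(p * y) + s) / (sum(p) + sum(y) + s).
    Only predicted and true positives enter the ratio, so a large number of
    absent event types does not make a poor prediction look good."""
    intersection = sum(p * y for p, y in zip(probs, targets))
    denom = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + smooth) / (denom + smooth)

print(soft_dice_loss([0.9, 0.2, 0.7], [1, 0, 1]))
# Imbalanced case: 100 event types, only one present, model predicts ~0 everywhere.
print(soft_dice_loss([0.01] * 100, [1] + [0] * 99))
```

In the imbalanced case, per-type BCE would be small (about 0.056 on average), while the Dice loss stays around 0.66, so the missed positive is still penalized.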

Huber loss for learning the temporal relations:

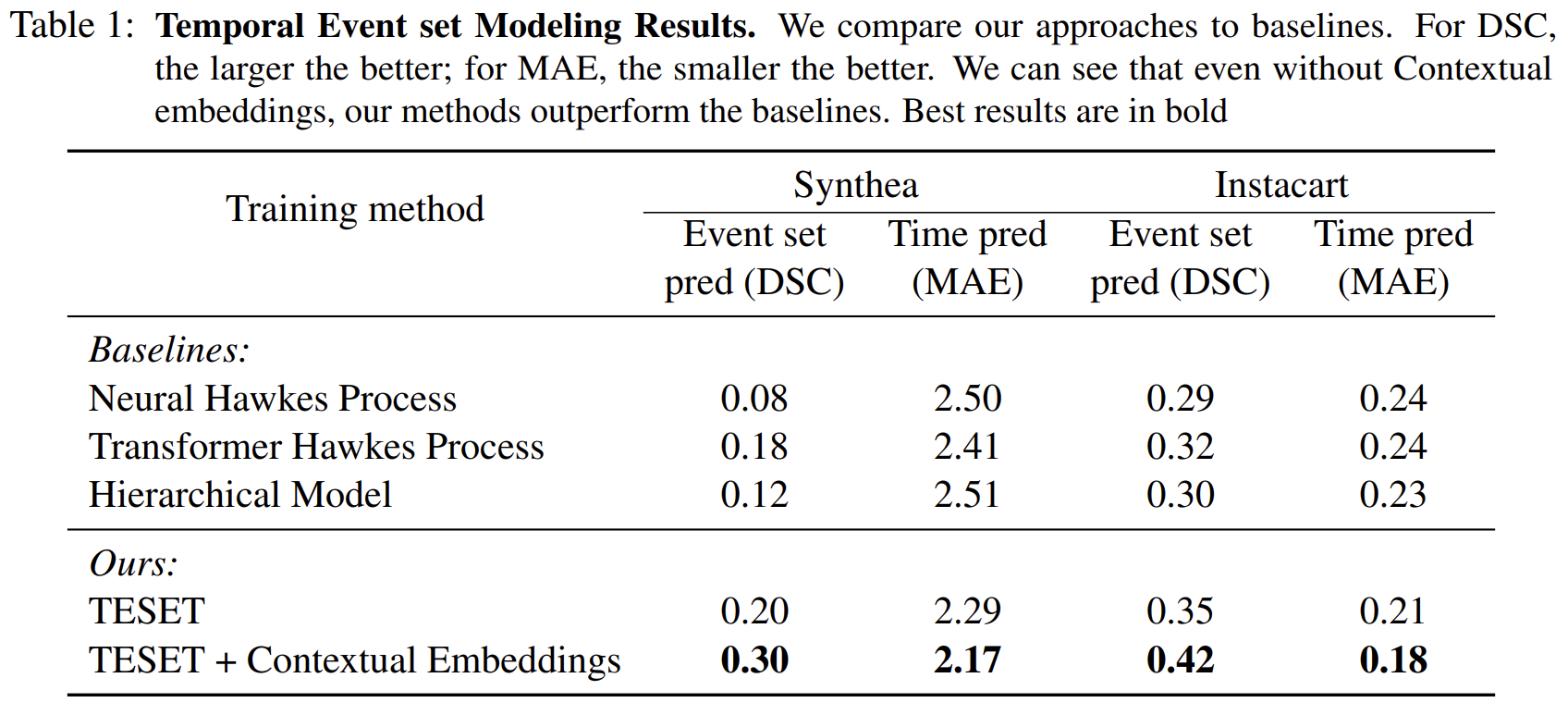
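A minimal sketch of the Huber loss, assuming it is applied to a predicted time quantity such as the gap until the next event set. The target quantity, the threshold `delta`, and the helper names are assumptions; the paper's definition is the equation above. The loss is quadratic for small errors and linear for large ones, so occasional very long gaps do not dominate training the way they would under squared error.

```python
def huber_loss(pred, target, delta=1.0):
    """Quadratic for |error| <= delta, linear beyond it; both branches
    meet at |error| = delta, so the loss is continuous."""
    err = abs(pred - target)
    if err <= delta:
        return 0.5 * err ** 2
    return delta * (err - 0.5 * delta)

def mean_huber(preds, targets, delta=1.0):
    """Average Huber loss over a batch of predicted time gaps."""
    return sum(huber_loss(p, t, delta) for p, t in zip(preds, targets)) / len(targets)

# Hypothetical predicted vs. true gaps (same time unit).
print(mean_huber([2.5, 10.0], [2.0, 2.0]))
```

Here the outlier with an error of 8 contributes 7.5 instead of the 32 it would under 0.5·err².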

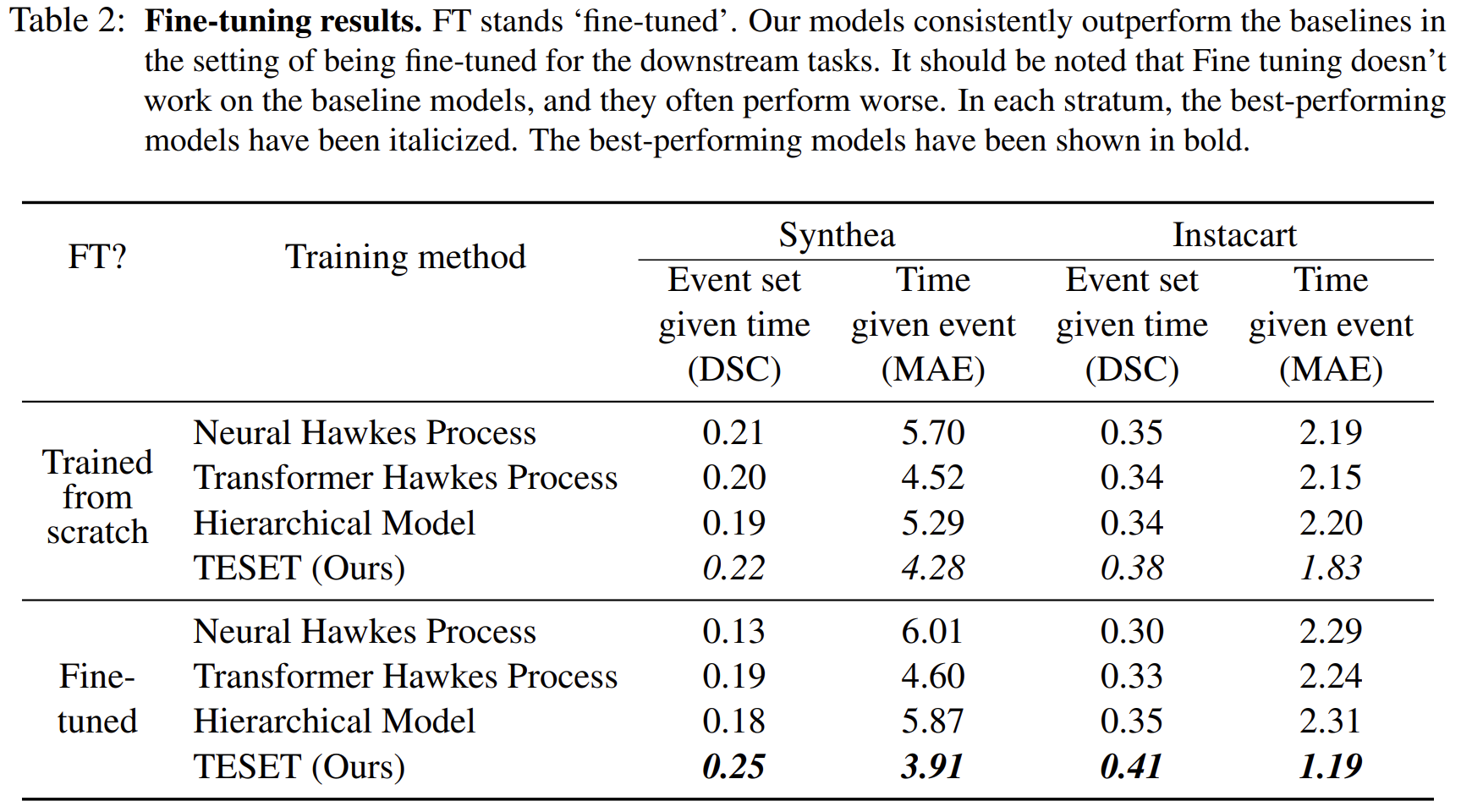

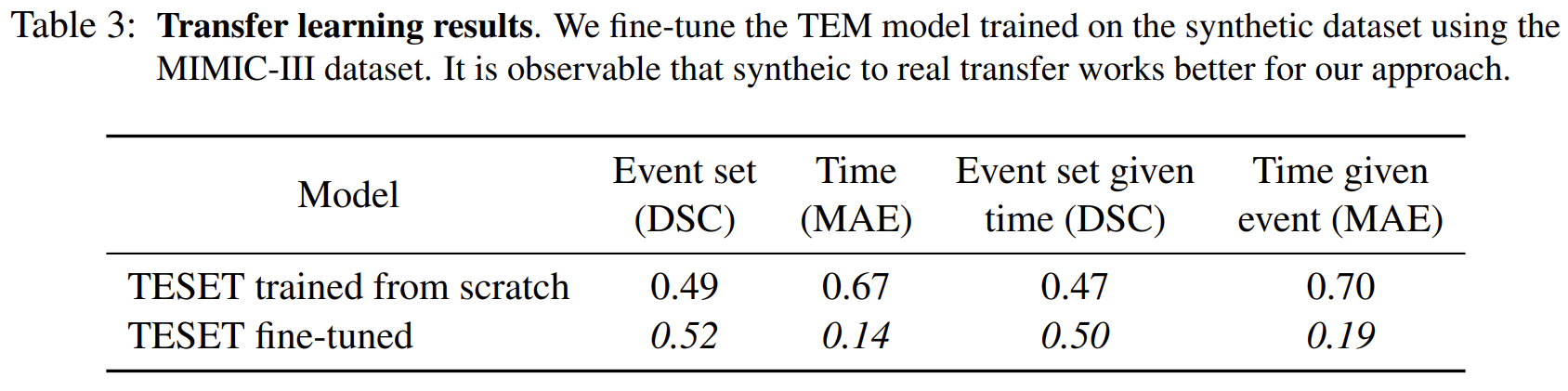

Experiments and Results

References

- [1] M. Winkel – Poisson processes, generalizations and applications [link]

- [2] T. Beckers – An introduction to Gaussian Process models [link]

- [3] P. J. Laub et al. – Hawkes Processes [link]

- [4] N. Du et al. – Recurrent Marked Temporal Point Processes [link]

- [5] H. Mei and J. Eisner – The Neural Hawkes Process [link]

- [6] S. Zuo et al. – Transformer Hawkes Process [link]