A unified platform for higher-order interaction modeling in dynamic networks.

Forecasting Multi-Relational and Recursive Interactions in Temporal Networks

Explore Our ResearchUnderstanding relations arising out of interactions among entities can be very difficult, and predicting them is even more challenging. This problem has many applications in various fields, such as financial networks and e-commerce. These relations can involve much more complexities than just involv- ing more than two entities. One such scenario is evolving recursive relations between multiple entities, and so far, this is still an open problem. This work addresses the problem of forecasting higher-order interaction events that can be multirelational and recursive. We pose the problem in the frame- work of representation learning of temporal hypergraphs that can capture complex relationships involving multiple entities. The proposed model, Relational Recursive Hyperedge Temporal Point Process (RRHyperTPP) uses an encoder that learns a dynamic node representation based on the historical interaction patterns and then a hyperedge link prediction- based decoder to model the occurrence of interaction events. These learned representations are then used for downstream tasks involving forecasting the type and time of interactions. The main challenge in learning from hyperedge events is that the number of possible hyperedges grows exponentially with the number of nodes in the network. This will make the computation of negative log-likelihood of the temporal point process expensive, as the calculation of survival function requires a summation over all possible hyperedges. In our work, we develop a noise contrastive estimation method to learn the parameters of our model, and we have experimentally shown that our models perform better than previous state-of-the-art methods for interaction forecasting.

Introduction

We use a multi-relational recursive hyperedges structure to incorporate these complex higher-order interactions. Here, hyperedges can act as nodes in other hyperedges (like CCed or receiver groups in email interactions) and contain relation types indicating their role in the interactions. Then, a TPP is defined with these hyperedges as event types with a conditional intensity function parameterized using representations/embeddings of the nodes and re- lations involved in the interactions.

Furthermore, the previous approaches in TPP-based interaction forecasting use negative sampling to approximate the loss associated with the survival function, which is intractable to calculate due to the huge number of possible event types in the TPP. This can introduce biases in training, especially in these complex interactions. To address these challenges, we propose the model Relational Recursive Hyperedge Temporal Point Process (RRHyperTPP). The following are the contributions of this work:

- Contrastive learning strategy: We propose a contrastive learning strategy for higher-order interaction for hyperedge events in networks. This provides a technique for training the model without maximum likelihood estimation, thereby avoiding the computationally expensive survival function calculation. We establish that the proposed model performs better than previous models that use negative sampling.

- New deep learning architecture: We propose a new deep learning architecture for learning node representation from higher-order interaction. This involves two stages: interaction update and temporal drift. Interaction update is used to revise the node representation when an event involving it occurs by using the features of the interaction. Temporal drift is used to model the evolution of node representation during the interevent period using time projection based on JODIE (Kumar, Zhang, and Leskovec 2019), Neural ODE (Chen et al. 2018), or time embeddings based on Fourier time features (Xu et al. 2020).

- Hyperedge link prediction: We propose a new method for hyperedge link prediction for multi-relational recursive hypergraphs.

- Extensive experiments: Extensive experiments are conducted to show the advantage of the proposed method over existing state-of-the-art models.

Problem Statement

Problem. Given E(t) = {(e1, t1), ..., (en, tn)} historical interactions until time t > tn, we aim to forecast the next interaction (tn+1, en+1), where tn+1 > t > tn. Here, we want to estimate the time tn+1 and the type of interaction en+1.

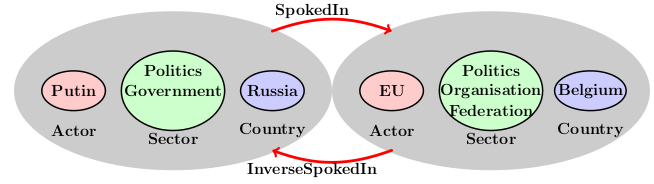

This work mainly focuses on higher-order interactions in communications networks and real-world events stored in source and target entities. The higher-order interaction in communications networks is represented using a recur- sive hyperedge graph of depth one h = {(hi0, ri0)}i=0k0. Here, {ri0}i=03 relations are used to represent the sender, recipients, and carbon-copied recipients’ addresses in case of email exchange and {ri0}i=04 represents the sender, re- ceiver, retweeter, and retweeted nodes in the Twitter network. The higher-order interactions in real-world events are repre- sented using depth two hyperedges h = {(h01, r01), (h11, r11)} and h0/11 = {(hi0, ri0)}i=03. Here, h01, h11 are the two entities involved in the interactions, r01 and r11 are the inverse relation pairs indicating the type of interactions, {ri0}i=03 are relations indicating the actor, sector, and country of the entity. Figure 1 shows an example of this type of higher-order interaction.

Relational Recursive Hyperedge Temporal Point Process (RRHyperTPP) Model

The RRHyperTPP model is a temporal point process (TPP) designed for hypergraphs. It defines event probabilities using a conditional intensity function and a survival function. The likelihood function is optimized using Noise Contrastive Estimation (NCE) instead of Maximum Likelihood Estimation (MLE).

The probability of a hyperedge event h occurring at time t is given by:

Pₕ(t) = λₕ(t) Sₕ(tₚₕ, t)

- λₕ(t): Intensity function.

- Sₕ(tₚₕ, t): Survival function capturing the probability that no event has occurred in [tₚₕ, t].

- The complete likelihood function considers both observed events and survival probabilities.

- Noise Contrastive Estimation (NCE) is used instead of MLE to enhance computational efficiency.

- Introduces noise streams to approximate event distributions.

- Optimizes a contrastive loss function comparing real event distributions with sampled noise.

- Negative hyperedges are generated using a corruption model for better generalization.

- Diverse negative samples are ensured by replacing parts of real hyperedges.

- Nodes have time-dependent embeddings V(t).

- Captures dynamic node evolution via a continuous-time recurrent neural network (RNN).

- Two stages:

- Node Update: Updates embeddings when an event occurs.

- Drift: Embeddings evolve over time between events.

- Uses the AdamW optimizer for parameter updates.

- Negative samples are incorporated into both event likelihood loss and supervised noise contrastive loss.

Experimental Results

Dataset

We evaluate our model on a real-world dataset of email interactions and Twitter retweet events. The dataset contains information about the sender, receiver, and carbon-copied recipients in the case of email interactions and sender, receiver, retweeter, and retweeted nodes in the case of Twitter retweets.

Publication

Deep Representation Learning for Forecasting Recursive and Multi-Relational Events in Temporal NetworksCode

Our code is available on GitHub.

Authors

- Tony Gracious

- Ambedkar Dukkipati